The 2026 AI Data Engineer Roadmap

And how to avoid getting replaced

AI has made manually writing complex data pipelines mostly obsolete.

If AI can generate pipelines, DAGs, tests, and even migrations…

What’s left for data engineers to actually work on?

Conceptual knowledge is no longer “nice to have.” It’s the entire job.

In this article, we’ll cover:

How AI is actually impacting data engineering in 2026 (see the 2025 edition for reference)

Which responsibilities are accelerating vs. eroding

How AI coding agents (Cursor, AdaL, Claude Code, Copilot Workspace) change daily work

The design patterns and best practices that now matter more because of AI

A delicious summary infographic at the end

This edition is brought to you by:

DataExpert.io is launching a free Vibe Coding Boot Camp on February 14th and 15th! You’ll get free credits to use AdaL CLI, and we’ll build a fully fledged capstone product together in two days!

How AI impacts data engineering in 2026

The question has shifted from:

“Will AI take data engineering jobs?”

to:

“Which parts of data engineering are now table stakes, and which are still scarce?”

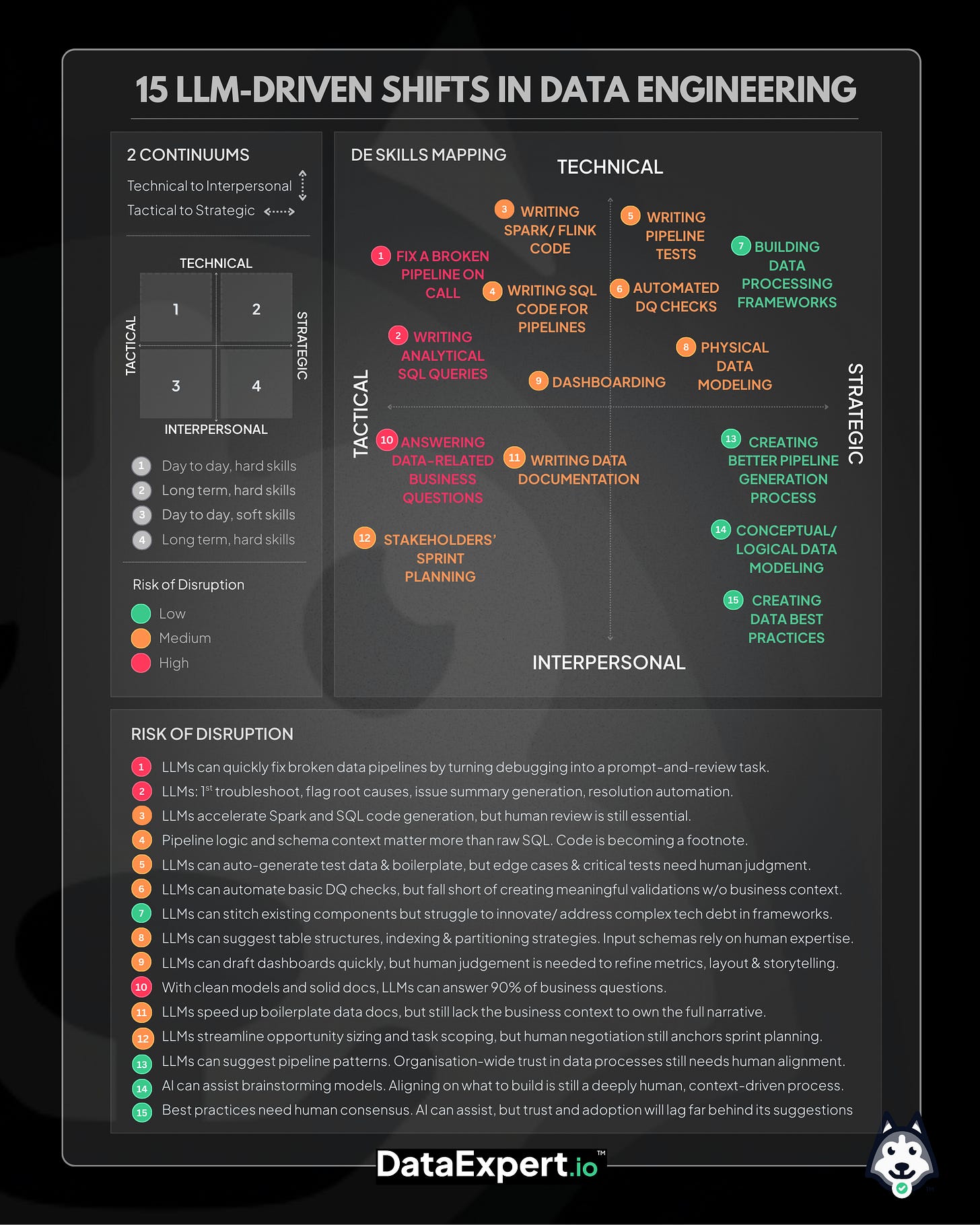

A useful way to think about this is still along two axes, Technical vs. Soft skills and Tactical vs. Strategic, which give four quadrants. Compared to 2025, some of the risk levels have changed.

Technical + Tactical (short-term, hands-on execution)

Writing Spark / SQL code

Medium-high risk of disruption (up from medium in 2025)

In 2026:

AI reliably writes production-grade SQL, Spark, dbt, and Flink code

Most engineers are no longer the fastest code writers in the room

Reviewing, validating, and shaping the code is the real work

If your value was syntax mastery, that value is now commoditized.

Fixing broken pipelines while on call

Very high risk of disruption (up from high in 2025)

Most failures are:

Schema drift

Memory/config issues

Late or duplicated data

Bad quality checks
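Schema drift is the most mechanical of these failures to detect. The sketch below is minimal and assumes that schemas are available as plain column-name-to-type mappings. The `detect_schema_drift` helper and the example columns are hypothetical and are not taken from any specific tool.

```python
from typing import Dict, List


def detect_schema_drift(expected: Dict[str, str], observed: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare the schema a pipeline expects with the schema seen in new data.

    Both arguments map column name -> type name (a hypothetical representation).
    """
    added = sorted(set(observed) - set(expected))
    removed = sorted(set(expected) - set(observed))
    # Columns present in both schemas whose type changed (case-insensitive)
    retyped = sorted(
        col for col in set(expected) & set(observed)
        if expected[col].lower() != observed[col].lower()
    )
    return {"added": added, "removed": removed, "retyped": retyped}


if __name__ == "__main__":
    expected = {"user_id": "BIGINT", "country": "VARCHAR", "date": "DATE"}
    observed = {"user_id": "VARCHAR", "country": "VARCHAR", "signup_ts": "TIMESTAMP"}
    print(detect_schema_drift(expected, observed))
    # {'added': ['signup_ts'], 'removed': ['date'], 'retyped': ['user_id']}
```

A removed or retyped column would usually fail the run before anything is published. An added column might only warrant a warning. Where to draw that line is a team decision, not something the check can decide.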

AI agents are now very good at:

Root-cause classification

Suggesting fixes

Auto-tuning retries and thresholds

This is a huge burnout reducer, but it also means on-call heroics are no longer a moat.

Technical + Strategic (long-term system design)

Building data processing frameworks

Low risk of disruption (unchanged from 2025)

AI still struggles with:

Large-scale refactors

Deep tech debt

Performance tradeoffs across layers

Human constraints (org structure, infra politics)

Try giving an agent a 5-year-old Airflow monorepo and a vague goal. It still falls apart.

Humans remain essential here.

Automated data quality & observability

Medium-high risk of disruption (up from medium in 2025)

AI is now very good at:

Generating expectations

Detecting anomalies

Proposing checks

But it’s still bad at deciding what actually matters.

Business semantics, risk tolerance, and trust thresholds remain human decisions.
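Even a toy check shows this split between detection and judgment. The sketch below is minimal: it runs a z-score anomaly check on daily row counts. The mechanical part is computing the deviation. The `z_threshold` parameter is the human decision, because it encodes how much deviation the business tolerates. The function name and the sample numbers are illustrative assumptions.

```python
import statistics
from typing import Sequence


def is_row_count_anomalous(history: Sequence[int], today: int, z_threshold: float = 3.0) -> bool:
    """Flag today's row count if it lies more than z_threshold standard
    deviations from the historical mean.

    Computing the z-score is mechanical; choosing z_threshold is not.
    """
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation
    if stdev == 0:
        return today != mean  # any change from a perfectly flat history is unusual
    return abs(today - mean) / stdev > z_threshold


if __name__ == "__main__":
    history = [1000, 1020, 980, 1010, 990]
    print(is_row_count_anomalous(history, 1050))                   # True (about 3.2 standard deviations)
    print(is_row_count_anomalous(history, 1050, z_threshold=4.0))  # False
```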

Writing tests

Medium risk of disruption (unchanged from 2025)

AI excels at:

Generating fixtures

Happy-path tests

Synthetic data

Humans still own:

Edge cases

Regulatory scenarios

Business-critical correctness

Soft skills + Tactical (day-to-day collaboration)

Sprint planning

Medium risk of disruption (unchanged from 2025)

AI helps with:

Estimation

Dependency mapping

Drafting plans

But prioritization is still political, contextual, and human.

Writing documentation

Medium-high risk of disruption (up from medium in 2025)

In 2026:

AI maintains docs continuously

Boilerplate is fully automated

Drift detection is common

What’s left:

Narrative

Intent

Tradeoffs

“Why this exists”

Answering business questions

Very high risk of disruption (unchanged, now real)

If:

Data models are correct

Metrics are defined

Docs are accessible

Then AI answers 90–95% of business questions instantly.

The data engineer’s role shifts from:

answering questions

to

designing systems that answer questions correctly
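A metric layer is one way to design a system that answers questions correctly. Each metric is defined once and given an owner, and every answer is computed from that single definition. The sketch below is minimal and makes several assumptions:

* Rows are held in memory.
* The `Metric` registry, the `answer` helper, and the owner name are all hypothetical.
* It is not modeled on any specific semantic-layer product.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Row = Dict[str, object]


@dataclass(frozen=True)
class Metric:
    name: str
    description: str
    owner: str  # who resolves disputes about this definition
    compute: Callable[[List[Row]], float]


# Single registry: questions resolve to these definitions, not to ad-hoc queries
METRICS: Dict[str, Metric] = {}


def register(metric: Metric) -> None:
    if metric.name in METRICS:
        raise ValueError(f"metric {metric.name!r} is already defined")  # no competing definitions
    METRICS[metric.name] = metric


def answer(metric_name: str, rows: List[Row]) -> float:
    # Unknown metrics fail loudly instead of being improvised
    if metric_name not in METRICS:
        raise KeyError(f"no governed definition for {metric_name!r}")
    return METRICS[metric_name].compute(rows)


register(Metric(
    name="daily_active_users",
    description="Distinct users with at least one event on the day",
    owner="growth-data",  # hypothetical team name
    compute=lambda rows: float(len({r["user_id"] for r in rows})),
))

if __name__ == "__main__":
    events = [{"user_id": 1}, {"user_id": 2}, {"user_id": 1}]
    print(answer("daily_active_users", events))  # 2.0
```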

Soft skills + Strategic (long-term influence)

Designing pipeline generation processes

Low risk of disruption (unchanged from 2025)

Deciding:

Who can deploy

What needs review

How trust is built

…is still fundamentally a human consensus problem.

Conceptual data modeling

Low risk of disruption (unchanged from 2025, now more valuable)

AI can brainstorm schemas.

It cannot:

Align stakeholders

Resolve semantic disputes

Decide what should exist

This is now one of the highest-leverage skills in data engineering.

Creating data best practices

Low risk of disruption (unchanged from 2025)

Anything that requires:

Org buy-in

Cultural change

Long-term trust

…remains stubbornly human.

The pattern is clear

The last things AI disrupts are:

Strategy

Semantics

Governance

Trust

The first things it disrupts are:

Syntax

Glue code

Repetition

Heroics

How AI coding agents change data engineering

Development is now 2–10× faster, assuming you know what to ask for.

The workflow is no longer:

write code → debug → repeat

It’s:

specify intent → review → correct → institutionalize

Prompting pattern that works in 2026

Inputs → Technologies → Design Pattern → Constraints → Best Practices

Example:

Given this schema:

CREATE TABLE users (user_id BIGINT, country VARCHAR, date DATE)

Create an Airflow DAG using Trino that implements SCD Type 2 on country.

Requirements:

Idempotent

Write-audit-publish

Partition sensors

Backfill safe

Late data handling

If you can articulate intent clearly, agents now deliver shockingly good results.
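The core SCD Type 2 logic an agent should produce for that prompt is small, and it helps to know it well enough to review the output. The sketch below is a minimal Python version under these assumptions:

* Input is daily snapshot rows shaped like the `users` table above.
* The end date is inclusive and marks the last day a value was observed.
* The Airflow DAG, the Trino SQL, write-audit-publish, and the sensors are left out.

It rebuilds the full history from the snapshots, so reruns, backfills, and late-arriving days all produce the same result.

```python
from datetime import date
from itertools import groupby
from typing import Dict, List, Optional, Tuple

Snapshot = Tuple[int, str, date]  # (user_id, country, date): one row per user per day


def build_scd2(snapshots: List[Snapshot]) -> List[Dict[str, object]]:
    """Collapse daily snapshots into SCD Type 2 rows that track `country`.

    A deterministic full rebuild: the same snapshots always give the same
    output (idempotent), regardless of input order or exact duplicates.
    """
    rows: List[Dict[str, object]] = []
    # Drop exact duplicates; sort by user, day, then country for determinism
    ordered = sorted(set(snapshots), key=lambda s: (s[0], s[2], s[1]))
    for user_id, history in groupby(ordered, key=lambda s: s[0]):
        current: Optional[str] = None
        start: Optional[date] = None
        last_seen: Optional[date] = None
        for _, country, day in history:
            if country != current:
                if current is not None:
                    # Close the previous streak at the last day it was observed
                    rows.append({"user_id": user_id, "country": current,
                                 "start_date": start, "end_date": last_seen,
                                 "is_current": False})
                current, start = country, day
            last_seen = day
        # The latest value stays open-ended
        rows.append({"user_id": user_id, "country": current,
                     "start_date": start, "end_date": None, "is_current": True})
    return rows


if __name__ == "__main__":
    snaps = [(1, "US", date(2026, 1, 1)), (1, "US", date(2026, 1, 2)), (1, "CA", date(2026, 1, 3))]
    for row in build_scd2(snaps):
        print(row)
```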

What this means for data engineers

If you used to pride yourself on writing “nasty SQL”…

That advantage is gone.

The new advantage is knowing what to build and why.

Design patterns that matter most (2026 edition)

In order of leverage:

Dimensional modeling (Kimball) (a free four-hour course on modeling large volume fact data)

Metric-layer-first modeling (a free one-hour course on growth metrics and a free one-hour course on funnel metrics)

Slowly Changing Dimensions (Type 2+) (a free 45-minute lab on building a fully idempotent SCD type 2 table)

One Big Table data modeling (a great article about the tradeoffs)

Microbatch & dedup pipelines (a free GitHub repo explaining it)

Real-time (Kappa / Flink) (a free three-hour course on streaming)

Feature store architectures

Reverse ETL & activation pipelines
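Of these patterns, microbatch deduplication is the easiest to show in a few lines. The sketch below is minimal and makes these assumptions:

* Each event carries a unique `event_id` and a timestamp.
* When duplicates occur, the earliest copy wins.
* Hourly batches are used.

All names are illustrative, and the code is not taken from the linked repo. Each hourly batch is deduplicated on its own, which in a real pipeline would be a small, separate job. The partial results are then merged, which removes duplicates that span batch boundaries.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, List, Tuple

Event = Tuple[str, datetime, str]  # (event_id, event_ts, payload)


def split_into_hourly_batches(events: Iterable[Event]) -> List[List[Event]]:
    """Group events into micro-batches by the hour of their timestamp."""
    batches: Dict[datetime, List[Event]] = defaultdict(list)
    for event in events:
        hour = event[1].replace(minute=0, second=0, microsecond=0)
        batches[hour].append(event)
    return [batches[hour] for hour in sorted(batches)]


def keep_earliest(into: Dict[str, Event], event: Event) -> None:
    """Store `event` unless an earlier copy of the same event_id is already kept."""
    current = into.get(event[0])
    if current is None or event[1] < current[1]:
        into[event[0]] = event


def dedup_batch(batch: Iterable[Event]) -> Dict[str, Event]:
    """Deduplicate a single micro-batch independently of all others."""
    kept: Dict[str, Event] = {}
    for event in batch:
        keep_earliest(kept, event)
    return kept


def merge_batches(batches: Iterable[Iterable[Event]]) -> List[Event]:
    """Dedup each micro-batch, then merge the partial results so duplicates
    spanning batch boundaries are removed too."""
    merged: Dict[str, Event] = {}
    for batch in batches:
        for event in dedup_batch(batch).values():
            keep_earliest(merged, event)
    return sorted(merged.values(), key=lambda e: (e[1], e[0]))
```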

Best practices that survived AI

Because they’re about trust, not code.

Data modeling

Clear naming

Stable metrics

Explicit ownership (the MIDAS process at Airbnb was amazing for ownership)

Data lakes

Parquet

Compression (here’s a YouTube video about how I compressed a Parquet table from 100 TB to 5 TB)

Partitioning

Retention discipline

No duplicate sources of truth
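Partitioning and retention discipline work together. When every table is partitioned by date, enforcing retention reduces to listing the partitions that fall outside the window. The sketch below is minimal. It assumes Hive-style `ds=YYYY-MM-DD` partition names and a retention window counted in days, and it uses no particular engine's API. The actual deletion is left to whatever your table format or engine provides.

```python
from datetime import date, timedelta
from typing import Iterable, List


def expired_partitions(partitions: Iterable[str], today: date, retention_days: int) -> List[str]:
    """Return `ds=YYYY-MM-DD` partitions older than the retention window."""
    cutoff = today - timedelta(days=retention_days)
    expired = []
    for name in partitions:
        key, _, value = name.partition("=")
        if key != "ds":
            continue  # skip partition layouts this sketch does not understand
        if date.fromisoformat(value) < cutoff:
            expired.append(name)
    return sorted(expired)


if __name__ == "__main__":
    parts = ["ds=2026-02-13", "ds=2026-01-14", "ds=2026-01-15"]
    print(expired_partitions(parts, today=date(2026, 2, 14), retention_days=30))
    # ['ds=2026-01-14']  (cutoff is 2026-01-15, which is kept)
```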

DAGs

Idempotency (a free one-hour course on what makes pipelines idempotent)

Backfill safety (a great article about avoiding backfill nightmares)

Merge/overwrite semantics

Late data awareness
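Idempotency, backfill safety, and overwrite semantics all come down to the same habit: each run fully replaces one partition, and only after that partition passes an audit. The sketch below is a minimal in-memory version of that write-audit-publish flow. A dict stands in for the production table. The audit rules and the function names are hypothetical.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Table = Dict[str, List[Row]]  # partition key (e.g. "2026-02-14") -> rows


def audit_user_ids(rows: List[Row]) -> List[str]:
    """Example audit with hypothetical rules: non-empty partition, no null user_id."""
    problems = []
    if not rows:
        problems.append("partition is empty")
    if any(r.get("user_id") is None for r in rows):
        problems.append("null user_id")
    return problems


def write_audit_publish(prod: Table, partition: str, rows: List[Row],
                        audit: Callable[[List[Row]], List[str]]) -> None:
    staging: List[Row] = list(rows)  # 1. Write: stage output where consumers can't see it
    problems = audit(staging)        # 2. Audit: run quality checks on the staged data
    if problems:
        # Production stays untouched when the audit fails
        raise ValueError(f"audit failed for partition {partition}: {problems}")
    prod[partition] = staging        # 3. Publish: overwrite the whole partition, never append
```

Publishing replaces the partition instead of appending to it. As a result, rerunning a day (a retry, a backfill, or a rerun after late data lands) produces the same table rather than duplicated rows.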

Serving layers

Pre-aggregation

Low-latency stores for dashboards

No raw data in BI engines
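Pre-aggregation is what keeps raw data out of BI engines: the dashboard reads a small rollup instead of scanning events. The sketch below is minimal and assumes that raw events carry a date, a country, a user ID, and a revenue amount. The column names are illustrative.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, List, Set, Tuple

Event = Tuple[date, str, int, float]  # (event_date, country, user_id, revenue)


def daily_rollup(events: Iterable[Event]) -> List[Dict[str, object]]:
    """Aggregate raw events into one row per (day, country) for a dashboard."""
    revenue: Dict[Tuple[date, str], float] = defaultdict(float)
    users: Dict[Tuple[date, str], Set[int]] = defaultdict(set)
    for day, country, user_id, amount in events:
        revenue[(day, country)] += amount
        users[(day, country)].add(user_id)
    return [
        {"event_date": day, "country": country,
         "revenue": round(revenue[(day, country)], 2),
         "active_users": len(users[(day, country)])}
        for day, country in sorted(revenue)
    ]
```

Revenue in this rollup can be summed further, for example to get monthly totals. The `active_users` distinct counts cannot be summed across days, because the same user may appear on several days. Decisions like that belong in the serving-layer design, not in the dashboard.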

The bottom line

AI didn’t kill data engineering.

It killed the pretense that data engineering was about typing code.

The future data engineer:

Thinks in systems

Speaks business

Designs trust

Uses AI as a force multiplier

We teach all of these patterns and best practices in DataExpert.io Academy, both self-paced and cohort-based.

Our next cohort starts February 16th and covers Databricks, AI-native pipelines, Iceberg, and Delta Live Tables.

The first 5 people to use code AI2026 at DataExpert.io get 30% off the subscription or live bootcamp.
